10/07/2025. Modern bot traffic in 2025 is nothing like the clunky scripts of a few years ago. Sophisticated, AI-driven attackers routinely slip past bot detection systems that worked in 2023, exposing blind spots in defenses and turning false confidence into real risk.

TLDR:

- Automated bots now account for ~51% of web traffic, and malicious bots alone make up ~37% of all traffic; many of them evade older detection systems

- Behavioral biometrics that analyze keystroke patterns and mouse movements achieved 87% accuracy in Roundtable's head-to-head testing, vs 69% for Google reCAPTCHA

- Challenge-based CAPTCHAs cost users about 35 seconds each on average

- Sophisticated AI-powered bots can now solve many CAPTCHA challenges with human-level or better accuracy in selected cases

- Multi-layered detection combining behavioral analysis, device fingerprinting, and ML provides the best protection

The State of Bot Traffic in October 2025

The numbers from 2025 paint a sobering picture of the internet's bot problem. According to the latest Imperva Bad Bot Report, automated bots now make up over 51% of all web traffic. These are sophisticated, hard-to-detect automated systems that can mimic human behavior with alarming accuracy.

What's particularly concerning is that malicious bots account for 37% of all internet traffic. These aren't the simple scrapers and spam bots of yesteryear. Generative AI has completely changed bot development, allowing less sophisticated actors to launch higher volume, more targeted attacks than ever before.

The shift toward API-directed attacks represents another major evolution. In the latest Imperva report, API-targeted attacks made up ~44% of advanced bot traffic, targeting the backbone of digital services rather than web interfaces.

Understanding Bot Detection Fundamentals

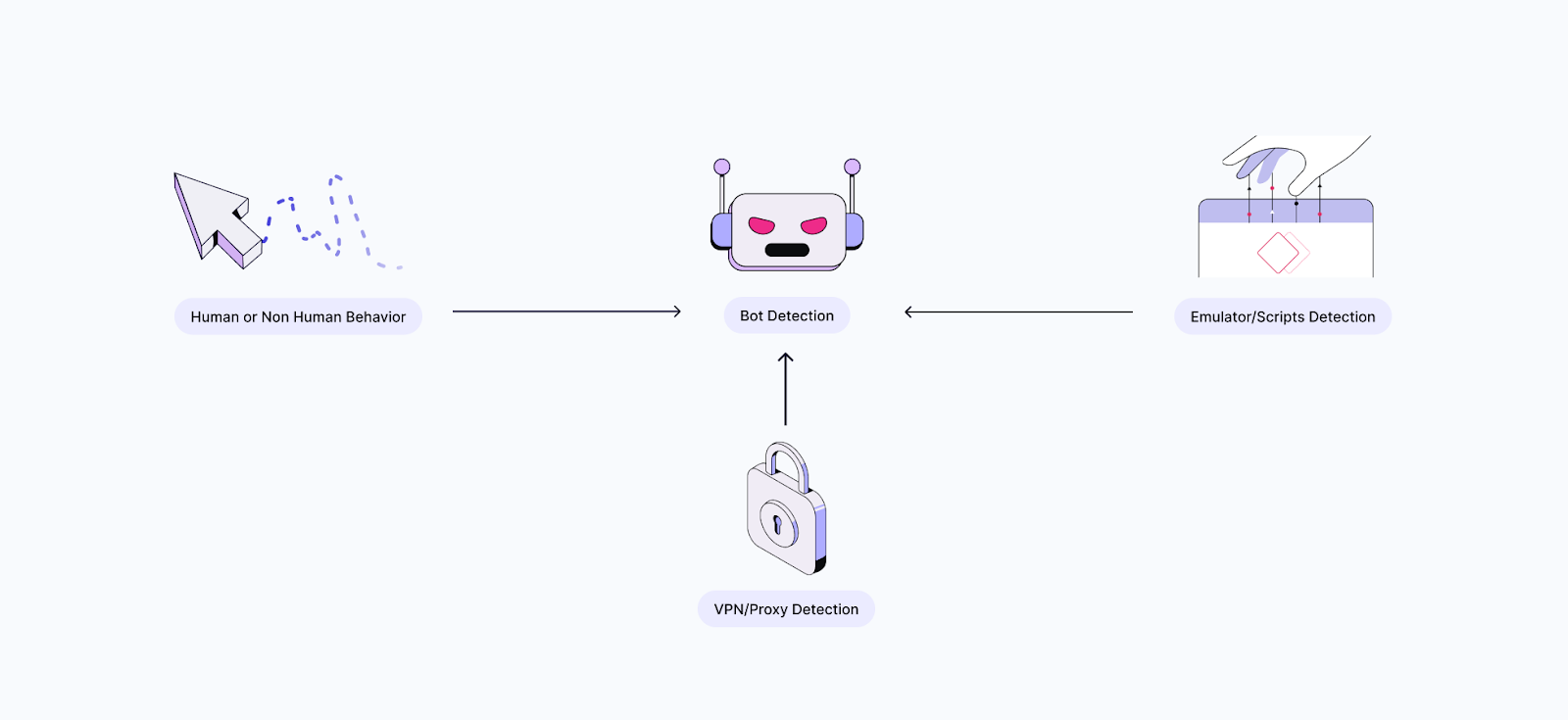

Bot detection is the process of identifying automated traffic and distinguishing it from legitimate human users. At its core, bot detection software analyzes incoming requests, user behavior, and interaction patterns to determine whether traffic originates from a human or an automated system.

Bad bots execute account takeover attacks, credential stuffing campaigns, carding fraud, and distributed denial-of-service attacks. They scrape pricing data, manipulate inventory systems, and flood applications with fake accounts.

But here's what makes bot detection particularly challenging: not all bots are malicious. Search engine crawlers, monitoring services, and legitimate automation tools serve important functions. Effective bot detection must distinguish between beneficial automation and malicious activity without blocking legitimate users or helpful bots.

Core Bot Detection Methods and Technologies

Behavioral Analysis and Pattern Recognition

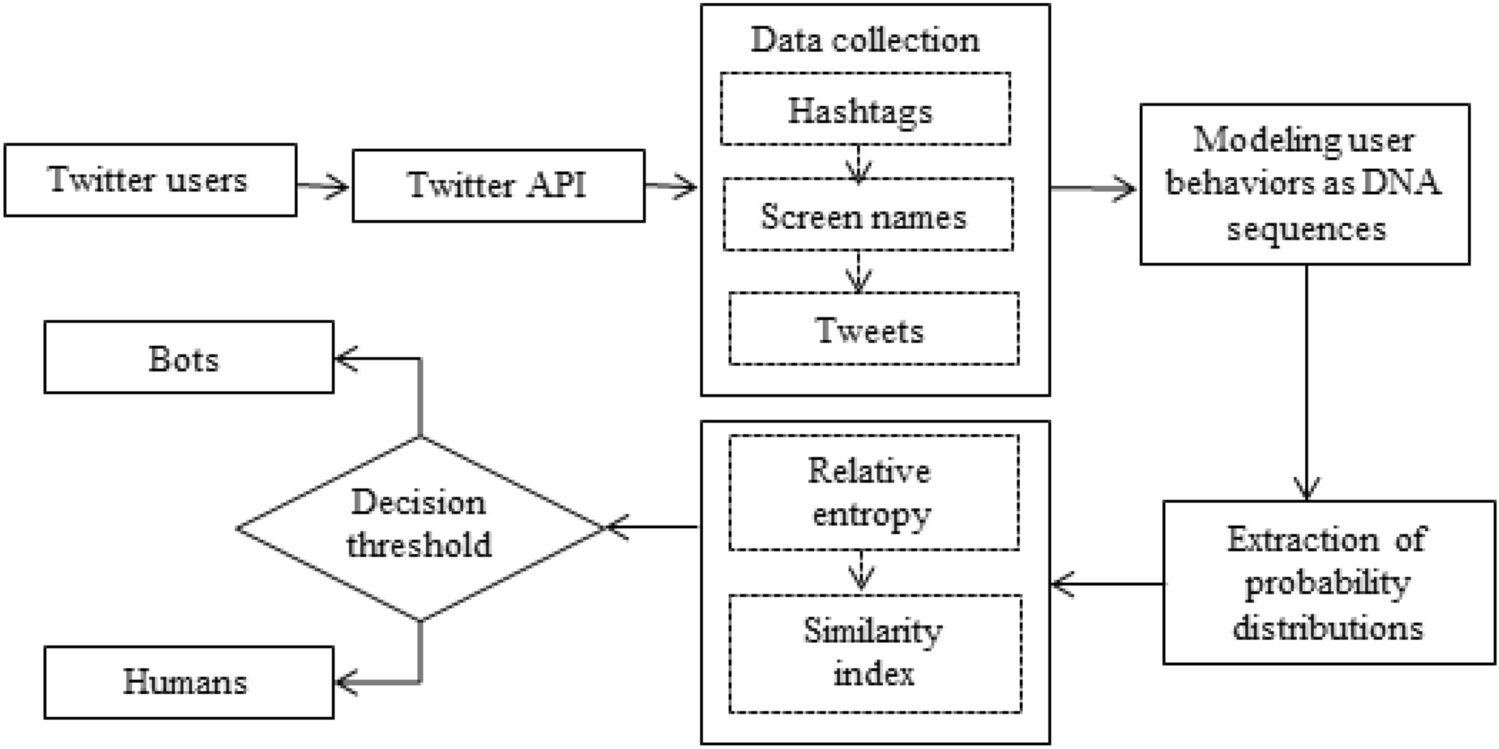

Behavioral analysis represents the cutting edge of bot detection technology. This approach monitors user interactions like mouse movements, keystroke patterns, scrolling behavior, and navigation flows to identify anomalies that indicate automated activity.

The key insight is that bots tend to exhibit linear, predictable movement patterns. A human user might move their mouse in a slightly wavy line from point A to point B, with small hesitations and course corrections. A bot typically moves in perfectly straight lines or follows mathematically precise curves.
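One simple way to quantify the "too straight" signal is a straightness ratio: the direct distance between the first and last pointer positions divided by the distance actually travelled. The sketch below is illustrative only; the function name and sample coordinates are assumptions, and a real system would combine this with many other features.

```python
import math


def path_straightness(points):
    """Return direct distance / travelled distance for a pointer path.

    1.0 means a perfectly straight path; lower values mean more wandering.
    """
    if len(points) < 2:
        raise ValueError("need at least two points")
    travelled = sum(math.dist(a, b) for a, b in zip(points, points[1:]))
    if travelled == 0:
        return 1.0  # the pointer never moved
    return math.dist(points[0], points[-1]) / travelled


# Hypothetical samples: a scripted path and a slightly wavy human-like path.
bot_like = [(0, 0), (50, 50), (100, 100)]
human_like = [(0, 0), (30, 42), (55, 48), (80, 91), (100, 100)]

print(round(path_straightness(bot_like), 3))
print(round(path_straightness(human_like), 3))
```

A ratio very close to 1.0 across many movements is one hint of scripted input. On its own it is weak evidence, since short human movements can also be nearly straight.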

Keystroke dynamics offer another rich source of behavioral data. Humans have distinctive typing patterns: the time between keystrokes, the pressure applied, and the rhythm of typing all vary with familiarity with the words and with cognitive load. Advanced behavioral biometrics systems can detect when typing patterns are too consistent or lack the natural variations that characterize human input.
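A minimal sketch of the "too consistent" check, assuming keystroke timestamps in milliseconds are available: it computes the coefficient of variation (standard deviation divided by mean) of the gaps between keystrokes. The function names and the 0.1 threshold are hypothetical and would need tuning on real data.

```python
import statistics


def interkey_cv(timestamps_ms):
    """Coefficient of variation of the gaps between consecutive keystrokes."""
    intervals = [b - a for a, b in zip(timestamps_ms, timestamps_ms[1:])]
    if len(intervals) < 2:
        raise ValueError("need at least three keystrokes")
    mean = statistics.fmean(intervals)
    if mean <= 0:
        raise ValueError("timestamps must be increasing")
    return statistics.pstdev(intervals) / mean


def looks_too_regular(timestamps_ms, min_cv=0.1):
    """Flag typing whose rhythm varies less than an assumed threshold."""
    return interkey_cv(timestamps_ms) < min_cv


# Hypothetical samples: fixed 100 ms gaps versus an uneven human rhythm.
scripted = [0, 100, 200, 300, 400]
human = [0, 140, 230, 480, 560, 790]

print(looks_too_regular(scripted))
print(looks_too_regular(human))
```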

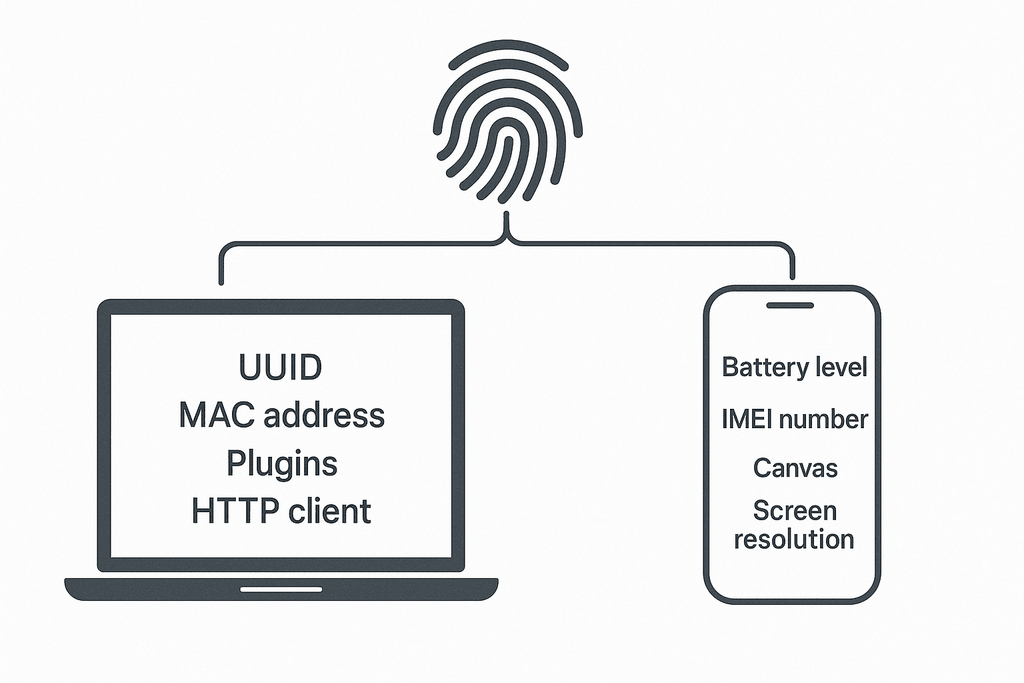

Device Fingerprinting and Environmental Analysis

Device fingerprinting creates unique identifiers for users based on their device characteristics, browser configuration, and network environment. This technique analyzes HTTP headers, screen resolution, installed fonts, timezone settings, and dozens of other environmental factors to build a complete device profile.

Fingerprinting works without cookies, making it particularly valuable for tracking suspicious activity across sessions. When a bot farm operates from similar infrastructure, fingerprinting can identify patterns in device configurations that reveal automated activity.

This technique can reveal improbable attribute combinations (e.g. a claimed mobile device with desktop-like resolution or traffic appearing from different geographic locations but sharing identical device fingerprints), which may raise suspicion in a probabilistic scoring model.
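The two checks described above can be sketched as follows. This is an illustration under stated assumptions, not a production fingerprinting scheme: the attribute names (`user_agent`, `screen_width`), the 1920-pixel threshold, and the session layout are all hypothetical.

```python
import hashlib
import json
from collections import defaultdict


def fingerprint(attrs):
    """Hash a dict of device attributes into a stable identifier."""
    canonical = json.dumps(attrs, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def implausible_mobile(attrs, desktop_width=1920):
    """Flag a claimed mobile user agent with a desktop-sized screen."""
    claims_mobile = "Mobile" in attrs.get("user_agent", "")
    return claims_mobile and attrs.get("screen_width", 0) >= desktop_width


def fingerprints_seen_in_many_countries(sessions, min_countries=2):
    """Return fingerprints observed from several distinct countries."""
    countries = defaultdict(set)
    for session in sessions:
        countries[fingerprint(session["attrs"])].add(session["country"])
    return {
        fp: sorted(seen)
        for fp, seen in countries.items()
        if len(seen) >= min_countries
    }


# Hypothetical sessions: the same device profile shows up in two countries.
attrs = {"user_agent": "ExampleBrowser Mobile", "screen_width": 2560}
sessions = [
    {"attrs": attrs, "country": "DE"},
    {"attrs": attrs, "country": "BR"},
    {"attrs": {**attrs, "screen_width": 390}, "country": "DE"},
]

print(implausible_mobile(attrs))
print(fingerprints_seen_in_many_countries(sessions))
```

In a probabilistic scoring model, each of these flags would raise a session's risk score and would not trigger a block by itself, since legitimate users can also produce unusual combinations.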

Advanced fingerprinting systems also analyze network-level characteristics like TCP fingerprints, TLS handshake patterns, and connection timing. These low-level signals are difficult for bots to manipulate and provide additional layers of detection power.

AI and Machine Learning Detection

Modern bot detection increasingly relies on AI and machine learning to identify and adapt to new threats. ML-based systems can learn from previously unknown bot behaviors as they encounter them, so the defense evolves along with the threats.

The power of ML-based detection lies in its ability to identify subtle patterns that human analysts might miss. These systems can process thousands of behavioral signals simultaneously, weighing the importance of each factor and combining them into risk scores that accurately distinguish bots from humans.

Modern systems often use ensembles of multiple specialized ML models, which tends to improve detection robustness. One model might analyze mouse movement patterns, another keystroke timing, and a third session-level behavioral flows. Combining these specialized models creates a detection system that is difficult for bots to evade.
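As a rough illustration of how specialized models might be combined, the sketch below takes a weighted average of per-model bot probabilities. The model names and weights are assumptions made for the example; real ensembles often learn the combination (for instance with a meta-model) instead of fixing weights by hand.

```python
def ensemble_risk(scores, weights):
    """Weighted average of per-model bot probabilities, each in [0, 1].

    Only models that produced a score are counted, so a session with no
    typing still gets a risk value from the remaining models.
    """
    if not scores:
        raise ValueError("no model scores supplied")
    total_weight = sum(weights[name] for name in scores)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")
    weighted = sum(scores[name] * weights[name] for name in scores)
    return weighted / total_weight


# Hypothetical weights and per-model outputs for one session.
WEIGHTS = {"mouse": 0.4, "keystroke": 0.4, "session_flow": 0.2}
session = {"mouse": 0.9, "keystroke": 0.8, "session_flow": 0.4}

print(f"risk={ensemble_risk(session, WEIGHTS):.2f}")
```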

Challenge-Based Prevention

Traditional CAPTCHA systems and challenge-based prevention methods have become increasingly problematic in 2025. These systems force users to solve puzzles, identify images, or complete other tasks to prove they're human.

The fundamental issue with challenge-based systems is that they create friction for legitimate users while failing to stop sophisticated bots. Users spend an average of about 35 seconds completing CAPTCHA challenges, which creates a major barrier to conversion and user satisfaction.

Meanwhile, AI-powered bots can now solve many CAPTCHA challenges with human-level or better accuracy in selected cases. Image recognition challenges, text-based puzzles, and even audio CAPTCHAs can be defeated by machine learning models trained for these exact tasks.

Industry-Specific Bot Detection Challenges

Different industries face unique bot threats that require tailored detection approaches. Financial services, healthcare, and e-commerce sectors experience the highest volumes of malicious bot traffic due to their reliance on APIs for critical operations and the high value of the data they protect.

- The travel industry faces bots scraping pricing information, manipulating inventory availability, and executing sophisticated booking fraud schemes.

- In e-commerce and retail, bad bots can dominate: Imperva reports that they can reach ~59% of overall traffic, among the highest of any industry. These bots engage in price scraping, inventory hoarding, sneaker bot attacks, and fake review generation.

- Financial services face sophisticated account takeover attempts and credential stuffing attacks where bots test stolen login credentials across multiple accounts.

- Healthcare organizations deal with bots attempting to harvest patient data, manipulate appointment systems, and exploit telehealth services.

Roundtable's Approach to Modern Bot Detection

Roundtable tackles the limitations of traditional bot detection through a new approach we call "Proof of Human." Our solution functions as an invisible CAPTCHA that analyzes behavioral patterns to distinguish real users from bots without creating any friction for legitimate users.

The core of our approach lies in behavioral biometrics analysis. We monitor subtle behavioral signals like keystroke dynamics, mouse movement patterns, and interaction timing to build a complete picture of user authenticity.

Our system achieved 87% bot detection accuracy in head-to-head testing, greatly outperforming Google reCAPTCHA (69%) and Cloudflare Turnstile (33%). This performance advantage comes from our focus on behavioral signals that are difficult for bots to replicate convincingly.

What sets Roundtable apart is our commitment to explainable results. Instead of providing opaque risk scores, our system offers clear, interpretable explanations for why a session was flagged as potentially automated. Security teams can see exactly which behavioral patterns triggered an alert, making it easier to fine-tune detection settings and investigate suspicious activity.

Cognitive science research identifies the subtle differences between human and automated behavior. We analyze micro-patterns in user interactions that reflect human cognitive processes: the small hesitations, corrections, and irregularities that characterize genuine human behavior.

The system operates continuously throughout user sessions, providing real-time "Proof of Human" scoring that adapts as users interact with applications. This continuous monitoring approach catches bots that might initially appear human but reveal automated characteristics over time.
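The general idea of a score that adapts during a session can be illustrated with an exponential moving average over per-event human-likeness scores. This is a generic sketch, not Roundtable's implementation; the class name, the `alpha` smoothing factor, and the sample scores are assumptions.

```python
class SessionScore:
    """Running human-likeness score in [0, 1], updated after each event."""

    def __init__(self, alpha=0.3, initial=0.5):
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.value = initial

    def update(self, event_score):
        """Blend the newest event score into the running value."""
        self.value = self.alpha * event_score + (1 - self.alpha) * self.value
        return self.value


# Hypothetical session that looks human at first, then turns mechanical.
score = SessionScore()
for event_score in [0.9, 0.9, 0.2, 0.1, 0.1]:
    print(round(score.update(event_score), 3))
```

A larger `alpha` reacts faster to a sudden change in behavior but is noisier; a smaller one is steadier but slower to catch a session that turns automated partway through.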

FAQ

How accurate are modern bot detection tools compared to traditional CAPTCHAs?

In Roundtable's head-to-head testing, behavior-based bot detection reached 87% accuracy, compared with 69% for Google reCAPTCHA and 33% for Cloudflare Turnstile. Traditional CAPTCHAs also create friction for users, while AI-powered bots can now solve many CAPTCHA challenges with human-level or better accuracy in selected cases.

What behavioral signals do advanced bot detection systems analyze?

Advanced systems monitor keystroke dynamics, mouse movement patterns, scrolling behavior, interaction timing, and navigation flows to identify automated activity. Humans exhibit natural irregularities like curved mouse paths and varied typing rhythms, while bots typically display linear, predictable patterns that are mathematically precise.

Can behavioral biometrics work without collecting personal user data?

Yes, effective behavioral biometrics can be implemented without storing direct identifiers. The analysis looks at how users interact with interfaces rather than who they are, maintaining privacy while providing accurate bot detection.

Final thoughts on protecting your site from sophisticated bot attacks

In recent years, bot detection has changed dramatically. Traditional methods simply can't keep up with AI-powered threats that now dominate web traffic. Your users deserve protection that doesn't slow them down with annoying challenges while actually stopping the bots that matter.

Roundtable's approach delivers that balance through invisible behavioral analysis that works behind the scenes. The future of bot detection lies in solutions that adapt as quickly as the threats they're designed to stop.